If you've been tasked with driving AI adoption in your organization, you're probably facing a familiar challenge: how do you actually measure whether it's working? Unlike traditional software rollouts, AI adoption is messier, more experimental, and harder to quantify.

Before diving into measurement, it's crucial to have the right structure in place. As our founder Gianluca writes in his article on operating models for AI transformation, you need clear accountability, AI Champions in each business unit, and a task force that owns the strategy. If you haven't set up your operating model yet, start there first.

Assuming you have the structure in place, this guide breaks down the essential frameworks and metrics you need to track AI adoption meaningfully.

The first wave of AI measurement was predictable. Companies tracked what was easy to count: licenses purchased, training completion rates, and daily usage. These numbers do go up. However, when we look closer, the 80% "daily usage" rate is mostly people using AI to rewrite emails they could have written themselves in the same amount of time, or to summarize documents they should be reading. High utilization on low-value tasks is nothing more than waste with a better UX.

This pattern repeats across organizations. Usage metrics divorced from use case quality create the illusion of transformation while actual work remains the same.

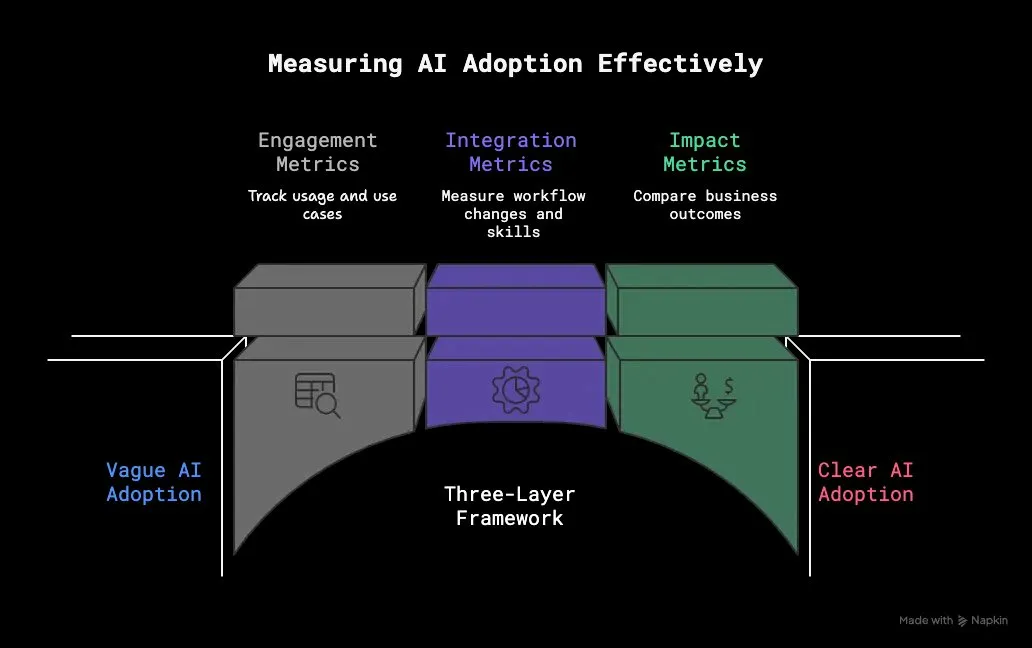

Meaningful measurement requires looking at three dimensions simultaneously: engagement (are people showing up?), integration (are they changing how they work?), and impact (are business outcomes different?). The companies that figure this out track what people are using AI for, how it's changing their work, and whether it matters to the business.

The baseline question: is anyone using these tools? But the critical follow-up: what are they actually doing?

What to track:

Awareness vs. reality: We cannot stress enough how important this work is for a realistic picture. This is where revealed versus stated preferences matter. Survey someone about their AI skills and watch the Dunning-Kruger effect in action. The gap between what people report and what they do shows where training and change management are missing the mark. A mixed approach that combines what people say with what they actually do reveals the true adoption picture.
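One way to make the stated-versus-revealed gap concrete is to put survey answers and platform data on the same scale and subtract. The sketch below is illustrative only: the field names, the 1-5 survey scale, and the cap of five observed use cases are assumptions, not a standard scoring method.

```python
from statistics import mean

# Hypothetical records: a 1-5 self-rating from a survey, and the number of
# distinct use cases seen in platform logs for the same person and period.
people = [
    {"id": "a", "self_rating": 5, "observed_use_cases": 1},
    {"id": "b", "self_rating": 3, "observed_use_cases": 4},
    {"id": "c", "self_rating": 4, "observed_use_cases": 0},
]

MAX_RATING = 5
MAX_USE_CASES = 5  # assumed cap used to normalize observed behavior to 0..1


def perception_gap(person):
    """Stated skill minus revealed behavior, both normalized to 0..1.

    Positive values mean the person rates themselves higher than
    their observed usage suggests.
    """
    stated = person["self_rating"] / MAX_RATING
    revealed = min(person["observed_use_cases"], MAX_USE_CASES) / MAX_USE_CASES
    return stated - revealed


gaps = {p["id"]: round(perception_gap(p), 2) for p in people}
average_gap = round(mean(gaps.values()), 2)

print(gaps)
print("average gap:", average_gap)
```

A large positive gap for a team is a prompt to look at training and change management there. Tracking the average over time shows whether the gap is narrowing.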

The pattern in successful adoption: usage rates climb steadily, use cases diversify over time, and the gap between what people say they can do and what they actually do narrows.

Engagement measures whether people show up. Integration measures whether anything actually changes. This is the layer where most AI programs stall.

What to track:

Integration shows up as use cases multiplying within roles, processes being rewritten to assume AI exists, and Champions actively supporting teams with visible results.

This is where measurement gets difficult and necessary. Everything upstream is a proxy for this layer: are business outcomes actually different?

What to track:

Console analytics + survey feedback: Platform data shows what people do. Surveys show what they think they do and how they feel about it. The combination reveals both behavior and perception.

Formal tracking + informal indicators: Training completion rates and tool adoption metrics give you structure. Success stories and Champion observations give you texture. Both matter.

Short-term metrics + long-term impact: Immediate adoption numbers (who's using what this month) tell you about momentum. Sustained usage patterns (are people still using it six months later) tell you about stickiness.

Don't pick one method and bet everything on it. Layer quantitative and qualitative, formal and informal, immediate and sustained. The goal is signal from multiple angles.
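The short-term versus long-term distinction can be sketched with two small calculations: the share of people active in a given month (momentum) and the share of an early cohort still active later (stickiness). The data shape here, a mapping from user to the set of months in which they were active, is a hypothetical export format and not the output of any particular platform.

```python
def active_rate(activity, month, total_users):
    """Share of all users who were active in the given month."""
    active = sum(1 for months in activity.values() if month in months)
    return active / total_users


def retention(activity, start_month, later_month):
    """Of the users active in start_month, the share still active in later_month."""
    cohort = [user for user, months in activity.items() if start_month in months]
    if not cohort:
        return 0.0
    retained = [user for user in cohort if later_month in activity[user]]
    return len(retained) / len(cohort)


# Hypothetical data: months since rollout in which each user was active.
activity = {
    "ana": {1, 2, 3, 4, 5, 6},
    "ben": {1, 2},
    "cy": {1, 6},
    "dee": {3, 4},
}

momentum = active_rate(activity, month=1, total_users=len(activity))
stickiness = retention(activity, start_month=1, later_month=6)

print(f"month 1 active rate: {momentum:.0%}")
print(f"month 1 -> month 6 retention: {stickiness:.0%}")
```

In this toy data, three of four users were active in the first month, but only two of those three were still active in month six. Reporting both numbers side by side keeps early enthusiasm from being mistaken for lasting change.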

Knowing what to measure is different from knowing how to measure it. Here's what works in practice.

The right measurement framework depends on what success means for your specific organization. At AI Academy, we work with companies at the start of their AI transformation to determine which metrics matter based on their goals: productivity gains, cultural change around AI acceptance, specific tool adoption rates, or competitive positioning. The measurement approach should align with what you're actually trying to achieve and communicate.

We've seen most companies skip establishing a baseline and regret it later. Document current productivity levels, process timelines, and output quality before AI enters the picture. Run initial surveys on AI knowledge and confidence.

The measurement system shouldn't be more complex than what you're trying to measure. Start with:

Measurement data has two audiences: leadership (for reporting) and users (for learning). Most organizations only serve the first audience. The companies that accelerate AI adoption share insights back to the people doing the work. Show aggregate patterns, highlight creative use cases from Champions, make power user approaches visible. When measurement drives learning, adoption compounds. When it only drives reporting, it stagnates.

A few patterns that keep appearing in organizations that struggle with measurement:

Measuring too early: AI adoption takes months to show up in the data. Organizations that expect transformation in weeks end up making bad decisions based on incomplete data.

Celebrating usage metrics while ignoring use case quality: What matters is both what people are doing and how often they're doing it.

Trusting self-reported data alone: People consistently overestimate their own AI competence and underreport their struggles.

Ignoring the qualitative signal: Numbers don't capture breakthrough moments. Stories about how AI enabled something impossible before, or saved a critical project, matter. They're harder to aggregate but they're often the clearest signal that something real is happening.

Creating incentive systems that reward activity over outcomes: When you measure and reward usage volume, people game the system. They'll use AI wastefully to hit targets.

Treating low adoption as a measurement problem rather than an execution problem: When metrics show low adoption or poor use case quality, the instinct is to measure harder. The actual problem is usually somewhere in training, tool selection, or culture. Measurement surfaces the problem; it doesn't solve it.

After 18-24 months of deliberate work, organizations that figure out AI adoption show some consistent patterns:

But the clearest signal of success of all is when AI stops being a special initiative: when people stop talking about "using AI" and just talk about their work getting done faster or better. If you want our team to help you understand how to measure adoption, reach out!

—

What's the difference between measuring AI adoption and measuring AI ROI?

AI adoption measurement focuses on whether people are using AI tools and how those tools are changing their work behaviors. ROI measurement focuses specifically on financial returns—revenue increases or cost reductions directly attributable to AI. Adoption is a leading indicator that should eventually contribute to ROI, but strong adoption doesn't automatically guarantee positive ROI if people are using AI for low-value tasks.

How long does it typically take to see meaningful AI adoption metrics?

Expect 3-6 months to see reliable engagement patterns and initial integration signals. Meaningful business impact metrics typically require 12-18 months of sustained effort. Organizations that expect transformation in weeks often make premature decisions based on incomplete data. AI adoption is a marathon, not a sprint.

Should we measure AI adoption at the individual, team, or organizational level?

All three levels matter, but they tell different stories. Individual metrics reveal personal skill development and usage patterns. Team metrics show workflow integration and collaborative applications. Organizational metrics demonstrate systemic change and business impact. The most effective measurement systems track all three levels and examine how they interact.

What's a realistic target for daily active users of AI tools?

There's no universal benchmark because it depends on your organization's structure and goals. A company with 60% daily active users doing substantive work demonstrates stronger adoption than one with 90% daily users mostly rewriting emails. Focus less on hitting specific percentage targets and more on whether the right people are using AI for high-value applications in their specific roles.
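To illustrate the 60% versus 90% comparison, the sketch below reports two rates per company: the share of employees active today, and the share doing at least one high-value task. The task labels and the choice of which labels count as high-value are assumptions. In practice each organization would define these for its own roles.

```python
# Hypothetical task labels; which ones count as high-value is an assumption.
HIGH_VALUE = {"analysis", "drafting_proposals", "code_review"}


def adoption_rates(daily_tasks, headcount):
    """Return (active rate, substantive rate) for one day.

    daily_tasks maps each active user to the set of task labels they used AI for.
    """
    active = len([tasks for tasks in daily_tasks.values() if tasks])
    substantive = len([tasks for tasks in daily_tasks.values() if tasks & HIGH_VALUE])
    return active / headcount, substantive / headcount


# Company A: 6 of 10 employees active, all doing substantive work.
company_a = {f"a{i}": {"analysis"} for i in range(6)}

# Company B: 9 of 10 employees active, but only 2 go beyond rewriting emails.
company_b = {
    f"b{i}": ({"code_review", "email_rewrite"} if i < 2 else {"email_rewrite"})
    for i in range(9)
}

rates_a = adoption_rates(company_a, headcount=10)
rates_b = adoption_rates(company_b, headcount=10)

print("A (active, substantive):", rates_a)
print("B (active, substantive):", rates_b)
```

Company B wins on the headline number and loses on the one that matters, which is why the second rate is worth reporting alongside the first.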

How do we measure AI adoption when people use multiple AI tools?

Track adoption at both the tool level and the use case level. Platform-specific metrics show which tools people prefer, while use case tracking reveals whether people are solving meaningful problems regardless of which tool they choose. The use case diversity matters more than which specific tool someone uses—if they're accomplishing five different types of work with AI, that's integration regardless of whether they use one tool or five.

What should we do if usage metrics are high but business impact is low?

This signals a use case quality problem, not an adoption problem. People are using AI, but for low-value tasks that don't move business outcomes. Investigate what people are actually doing with AI through surveys and observations. Then provide training on higher-value applications, share success stories from power users, and potentially restrict or discourage the lowest-value use cases while promoting better ones.

How do we attribute business outcomes to AI when many factors influence results?

Perfect attribution is impossible, but you can build reasonable confidence through several approaches: compare AI-using teams against similar non-AI teams, track changes in metrics before and after AI introduction, gather qualitative evidence from users about how AI contributed to specific outcomes, and look for patterns across multiple metrics that collectively suggest AI impact. Accept that measurement will be imperfect but still valuable.
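The first two approaches, comparing against similar non-AI teams and comparing before and after, can be combined into a simple difference-in-differences estimate. The sketch below uses made-up cycle-time numbers and assumes the two groups are comparable and would have followed similar trends without AI. That is a strong assumption, so treat the result as one input among several.

```python
from statistics import mean


def diff_in_diff(ai_before, ai_after, control_before, control_after):
    """Change in the AI group minus change in the comparison group.

    Subtracting the comparison group's change removes shifts that affected
    everyone (seasonality, new processes) from the AI group's change.
    """
    ai_change = mean(ai_after) - mean(ai_before)
    control_change = mean(control_after) - mean(control_before)
    return ai_change - control_change


# Hypothetical metric: average days to complete a standard deliverable, per team.
ai_before = [10, 12]
ai_after = [7, 9]
control_before = [10, 12]
control_after = [9, 11]

estimate = diff_in_diff(ai_before, ai_after, control_before, control_after)
print("estimated effect on cycle time (days):", estimate)
```

Here the AI teams improved by three days and the comparison teams by one, so about two days of the improvement is plausibly associated with AI. Pair a number like this with qualitative evidence from users before reporting it.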

Should AI adoption be tied to employee performance reviews?

Approach this carefully. Tying adoption to performance reviews can drive usage numbers up artificially without improving use case quality—people will use AI to hit targets rather than to improve their work. Instead, consider recognizing and rewarding demonstrated impact from AI use, contributions to AI knowledge sharing, or innovation in applying AI to solve real problems. Focus on outcomes, not activity.

How often should we measure and report on AI adoption?

Track engagement metrics monthly to catch trends early. Assess integration signals quarterly through surveys and qualitative reviews. Evaluate business impact semi-annually or annually, as meaningful change takes time to materialize. Over-measuring creates reporting burden without additional insight, while under-measuring leaves you flying blind for too long.

What metrics should AI Champions specifically be responsible for?

AI Champions should track adoption patterns within their business units: usage frequency, use case diversity, skill development among their team members, and concrete examples of AI-driven improvements. They should also monitor barriers to adoption—where people get stuck, what training gaps exist, and what process changes are needed. Champions are your eyes and ears on the ground; their insights combine quantitative patterns with qualitative understanding.