Google's Gemini 2.5 has introduced a groundbreaking new feature that has the potential to revolutionize online education: video understanding with the ability to transform video content into interactive applications. This capability represents a significant advancement in how we can engage with educational content.

YouTube has long been an invaluable repository of knowledge on virtually any topic, but its passive viewing format has limitations when it comes to learning complex concepts. Gemini 2.5's new feature bridges this gap by enabling the conversion of video content into hands-on interactive experiences, transforming passive consumption into active learning.

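The process is remarkably straightforward:

1. Paste a YouTube video link into Gemini 2.5 in Google AI Studio.
2. Gemini analyzes the video and identifies the concepts it teaches.
3. It writes a detailed specification for an interactive app built around those concepts.
4. It generates the code from that specification and renders the working app.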

What makes this feature innovative is not simply that it creates applications, since AI tools could already generate code, but how it conceives interactive experiences directly from video content. The AI identifies the core concepts in the video and designs an interactive application specifically tailored to help users understand those concepts through engagement rather than passive viewing.
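The article does not describe how Gemini implements this internally, but the two stages it mentions (distilling a specification from the video, then generating code from that specification) can be sketched as a small prompt-chaining pipeline. This is a minimal sketch under stated assumptions: `video_to_app`, `SPEC_TEMPLATE`, `CODE_TEMPLATE`, and the `generate` callable are hypothetical stand-ins, not part of any Google API, and the video is reduced to a URL in the prompt text for simplicity.

```python
from typing import Callable

# Hypothetical prompt templates, written for this sketch only.
SPEC_TEMPLATE = (
    "Here is a video: {url}\n"
    "Identify the core concepts it teaches and write a detailed specification "
    "for an interactive web app that helps a learner understand them."
)
CODE_TEMPLATE = (
    "Build the app described below as a single self-contained HTML file.\n\n{spec}"
)


def video_to_app(url: str, generate: Callable[[str], str]) -> tuple[str, str]:
    """Two-stage sketch: video -> specification -> application code.

    `generate` stands in for any call that sends a prompt to a model and returns
    its text reply. A real multimodal call would attach the video itself rather
    than only mentioning its URL in the text.
    """
    spec = generate(SPEC_TEMPLATE.format(url=url))    # stage 1: distill a spec
    code = generate(CODE_TEMPLATE.format(spec=spec))  # stage 2: implement that spec
    return spec, code


# Usage with a stand-in model, so the flow runs without any API.
def fake_model(prompt: str) -> str:
    if prompt.startswith("Here is a video"):
        return "SPEC: four CIDI boxes"
    return "<html>...</html>"


spec, code = video_to_app("https://www.youtube.com/watch?v=example", fake_model)
print(spec)
print(code)
```

Returning the specification alongside the code keeps the intermediate step visible, so it can be edited and fed back in when the first result falls short.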

Our first example comes from an AI Academy course on how to use ChatGPT effectively. The source video explains the CIDI framework (Context, Instructions, Details, Input) for crafting effective prompts.

Original Video: AI Academy CIDI Framework Tutorial
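As a rough illustration of the framework itself, a prompt can be assembled from the four CIDI parts in order. This is a minimal sketch: the `CidiPrompt` class, the section labels, the one-line reading of each part in the comments, and the sample text are assumptions for illustration, not the course's exact format.

```python
from dataclasses import dataclass


@dataclass
class CidiPrompt:
    """One reading of the four CIDI parts; labels and layout are illustrative."""
    context: str       # background: who is asking and why
    instructions: str  # the task the model should perform
    details: str       # constraints such as length, tone, or format
    input_text: str    # the material the model should work on

    def render(self) -> str:
        # Emit the parts in C-I-D-I order, one labeled section each.
        sections = [
            ("Context", self.context),
            ("Instructions", self.instructions),
            ("Details", self.details),
            ("Input", self.input_text),
        ]
        return "\n\n".join(f"{label}: {text}" for label, text in sections)


prompt = CidiPrompt(
    context="I run a small bakery and send a weekly email newsletter.",
    instructions="Write a short announcement for a new bread.",
    details="Under 100 words, friendly tone, end by inviting readers to visit.",
    input_text="Bread name: Harvest Rye. Available on Fridays only.",
)
print(prompt.render())
```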

When fed into Gemini 2.5, the system generated a specifications prompt for an interactive drag-and-drop application that reinforces understanding of the framework:

> Build me an interactive web app to help a learner understand the CIDI framework for writing effective prompts. The acronym CIDI stands for Context, Instructions, Details, and Input. The learner needs to remember what the four letters stand for and how they relate to one another.
>
> SPECIFICATIONS:
>
> 1. The app should consist of four boxes, each representing one of the elements of the CIDI framework...

The resulting application presents users with four boxes representing each component of the CIDI framework, and a collection of keywords/phrases that users must drag into the appropriate boxes. This transforms what could have been passive memorization into an engaging sorting activity that reinforces understanding of each component's role.

When users correctly place items in the boxes, they receive immediate visual feedback, reinforcing their understanding through active learning.
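The checking logic behind a sorting activity like this can be quite small. The following is a minimal sketch, not the generated app's code: the phrases in `ANSWER_KEY` are invented for illustration, and `SortingBoard` is a hypothetical name. It accepts a drop into any box and reports whether it was correct, so a learner can make a mistake and see it flagged.

```python
from dataclasses import dataclass, field

# Hypothetical answer key: phrase -> the CIDI box it belongs in.
ANSWER_KEY = {
    "You are a travel agent": "Context",
    "Plan a three-day itinerary": "Instructions",
    "Use bullet points, max 200 words": "Details",
    "Destination: Lisbon, budget: $800": "Input",
}
BOXES = ("Context", "Instructions", "Details", "Input")


@dataclass
class SortingBoard:
    placements: dict[str, str] = field(default_factory=dict)

    def drop(self, phrase: str, box: str) -> bool:
        """Place a phrase in any box (wrong ones included); return whether it is correct."""
        if phrase not in ANSWER_KEY or box not in BOXES:
            raise ValueError(f"unknown phrase or box: {phrase!r} -> {box!r}")
        self.placements[phrase] = box  # dropping the same phrase again moves it
        return ANSWER_KEY[phrase] == box

    def score(self) -> tuple[int, int]:
        """Return (correct placements, total phrases)."""
        correct = sum(ANSWER_KEY[p] == b for p, b in self.placements.items())
        return correct, len(ANSWER_KEY)


board = SortingBoard()
print(board.drop("You are a travel agent", "Input"))    # False: wrong box, but the drop is allowed
print(board.drop("You are a travel agent", "Context"))  # True: moved to the correct box
print(board.score())                                    # (1, 4)
```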

The first iteration had a couple of issues:

First, the CIDI framework became "CIDN" in the application. Second, it wouldn't let users place phrases in incorrect boxes, which limits the learning experience because learners never get to make, and then correct, a mistake. Both issues could easily be addressed by modifying the specifications prompt or by asking another AI tool to edit the generated code.

For transparency, the app shown below is the unedited result of a zero-shot prompt, so it still has the limitations described above.

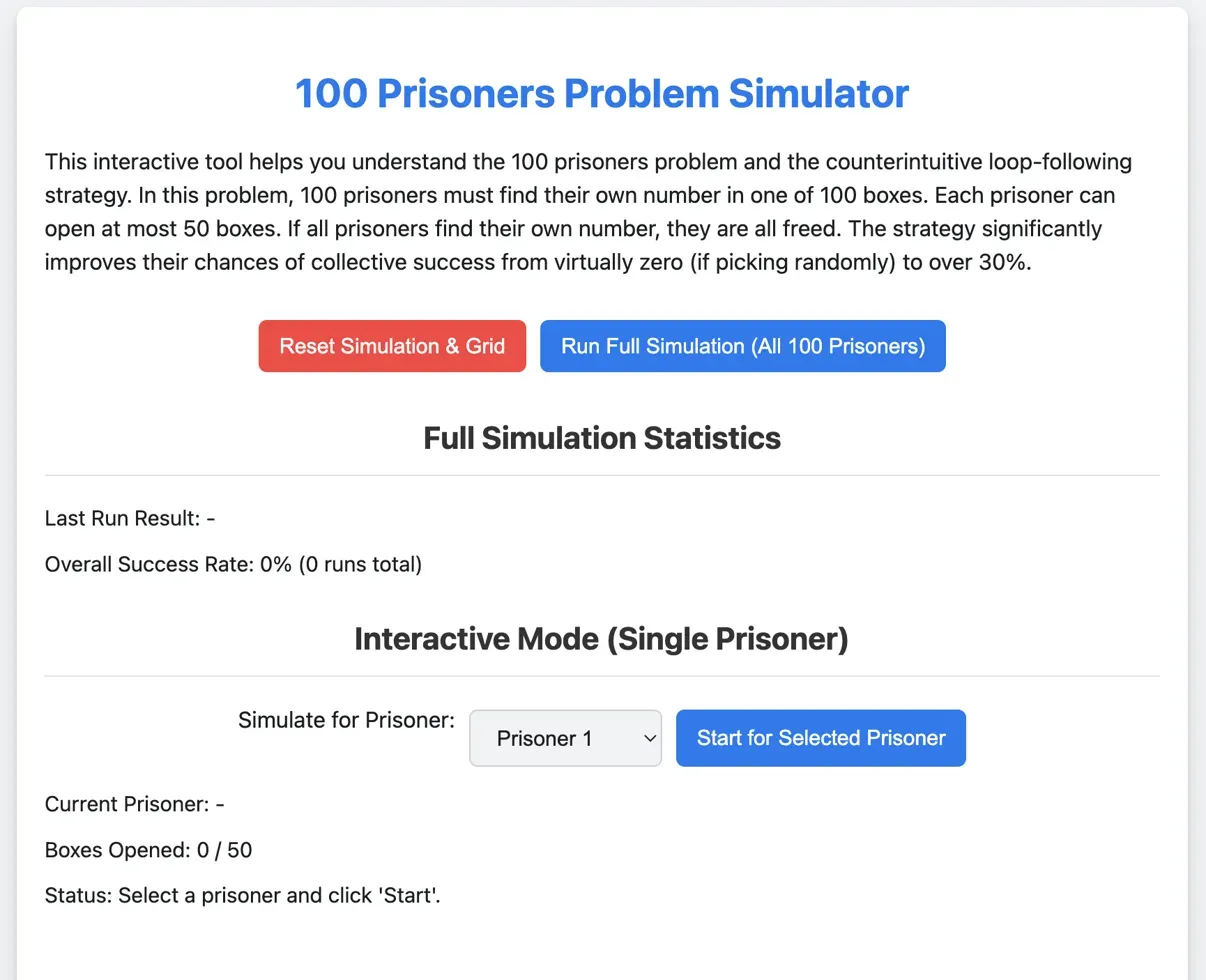

The second example demonstrates how the system can transform complex mathematical concepts into interactive simulations. The source is a Veritasium video explaining the famously counterintuitive "100 prisoners problem" and its solution.

Original Video: The Riddle That Seems Impossible Even If You Know The Answer

Gemini 2.5 generated specifications for an interactive simulator that allows users to experience the problem firsthand:

> Build me an interactive web app to help a learner understand the 100 prisoners problem and the counterintuitive logic that allows them to improve their odds of success by following a particular strategy.
>
> SPECIFICATIONS:
>
> 1. The app must visually represent a grid of 100 boxes, arranged in 10 rows of 10 boxes...

The resulting application creates a grid representing the 100 boxes, allowing users to either step through the process as a single prisoner or run a full simulation. By physically clicking through the "loop strategy," users can develop an intuitive understanding of why this approach works—something that can be difficult to grasp through explanation alone.
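The intuition the simulator builds can also be written down. Treat the arrangement of slips as a random permutation of 1 to 100. Starting at your own box and following the slips traces the cycle that contains your number, so you succeed exactly when that cycle has at most 50 boxes, and the whole group succeeds exactly when no cycle is longer than 50. Because cycle lengths sum to 100, there can be at most one cycle longer than 50, and the probability of a cycle of length $k$ in that range is $1/k$:

$$
\Pr[\text{a cycle of length } k] = \frac{\binom{100}{k}\,(k-1)!\,(100-k)!}{100!} = \frac{1}{k}, \qquad 51 \le k \le 100
$$

$$
\Pr[\text{all succeed}] = 1 - \sum_{k=51}^{100} \frac{1}{k} = 1 - \left(H_{100} - H_{50}\right) \approx 1 - 0.6882 = 0.3118
$$

Here $H_n$ is the $n$-th harmonic number. For comparison, if every prisoner opened 50 boxes at random, the group's chance would be $(1/2)^{100} \approx 7.9 \times 10^{-31}$.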

The interactive mode allows users to follow the strategy step by step, opening boxes according to the "loop-following" approach and seeing the results in real time.
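Under the hood, the step-by-step mode amounts to a short loop. In this minimal sketch, `follow_loop` is a hypothetical name and `boxes[i]` holds the slip found in box number `i + 1`. The prisoner opens the box bearing their own number, then always opens the box named on the slip just found, stopping on success or after 50 openings.

```python
import random


def follow_loop(boxes: list[int], prisoner: int, max_opens: int = 50) -> list[int]:
    """Return the box numbers a prisoner opens under the loop strategy.

    boxes[i] holds the slip found in box number i + 1 (numbers run 1..len(boxes)).
    The prisoner succeeds if the last box opened contains their own number.
    """
    opened = []
    box = prisoner  # start with the box labeled with your own number
    for _ in range(max_opens):
        opened.append(box)
        slip = boxes[box - 1]
        if slip == prisoner:
            break  # found own number
        box = slip  # next, open the box named on the slip
    return opened


boxes = list(range(1, 101))
random.shuffle(boxes)
path = follow_loop(boxes, prisoner=7)
found = boxes[path[-1] - 1] == 7
print(f"Prisoner 7 opened {len(path)} boxes: {path} -> {'success' if found else 'failure'}")
```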

The "Full Simulation Statistics" feature did not work properly: on every reset it seemed to report only "Success" or only "Failure" rather than an aggregate success rate. This reduces the application's educational value, because it fails to demonstrate the probability of success (approximately 31%) that makes this problem so fascinating.
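A working statistics mode only needs to repeat the experiment many times on fresh arrangements and count how often the whole group succeeds. This minimal sketch is not the generated app's code, and `longest_cycle` and `success_rate` are hypothetical names. Because each prisoner's path is exactly the cycle containing their number, it checks the longest cycle instead of replaying all 100 prisoners, which gives the same verdict in linear time per trial. The `seed` parameter only makes runs reproducible.

```python
import random
from typing import Optional


def longest_cycle(boxes: list[int]) -> int:
    """Length of the longest cycle when box i + 1 holds the slip boxes[i]."""
    seen = [False] * len(boxes)
    longest = 0
    for start in range(len(boxes)):
        length = 0
        i = start
        while not seen[i]:
            seen[i] = True
            i = boxes[i] - 1  # follow the slip to the next box
            length += 1
        longest = max(longest, length)
    return longest


def success_rate(trials: int = 10_000, n: int = 100, seed: Optional[int] = None) -> float:
    """Fraction of random arrangements in which every prisoner finds their number
    within n // 2 openings using the loop strategy."""
    rng = random.Random(seed)
    boxes = list(range(1, n + 1))
    wins = 0
    for _ in range(trials):
        rng.shuffle(boxes)  # a fresh arrangement every trial
        # The group succeeds exactly when no cycle exceeds the opening limit.
        if longest_cycle(boxes) <= n // 2:
            wins += 1
    return wins / trials


print(f"Estimated success rate: {success_rate(seed=1):.1%}")
```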

For transparency, this simulator is likewise the unedited result of a zero-shot prompt, so it still has the limitation described above.

What makes Gemini 2.5's video-to-application capability revolutionary is how it transforms the relationship between content creators and learners. Previously, creating interactive educational content required either significant technical expertise or substantial investment in specialized tools and development resources.

Now, educators can focus on what they do best, communicating concepts through video, while the AI handles the transformation into interactive experiences.

Gemini 2.5's video understanding capability represents a significant advancement in how AI can enhance education. By bridging the gap between passive video consumption and active learning, it provides a new avenue for content creators to transform their existing educational content into interactive experiences that promote deeper understanding.

While the feature is not without limitations—as seen in our examples—the core concept demonstrates enormous potential for revolutionizing online learning. As the technology matures, we can expect even more sophisticated transformations that could fundamentally change how we approach digital education.

For those interested in exploring this feature, it's available to try for free in Google AI Studio.

Additional Resources:

What is Gemini 2.5's video understanding feature?

Gemini 2.5's video understanding feature is a new capability that allows the AI to analyze video content and transform it into interactive applications. The system can extract context from a video, create detailed specifications, and generate functional code to build an interactive experience that reinforces the concepts taught in the original video.

What kinds of interactive applications can Gemini 2.5 create from videos?

Gemini 2.5 can create various types of interactive applications from videos, including drag-and-drop learning activities (like the CIDI framework helper) and simulations (like the 100 Prisoners Problem simulator). These applications allow users to engage with concepts through activities rather than passive viewing.

Do I need coding knowledge to use this feature?

No, you don't need coding knowledge to use this feature. You simply paste a YouTube video link into Gemini 2.5, and the AI handles the analysis, specification creation, code generation, and rendering of the interactive application.

What are the current limitations of this technology?

The current limitations include some accuracy issues (like mislabeling the CIDI framework as "CIDN" in one example) and functionality problems (such as the statistics feature not working properly in the 100 Prisoners simulation). These first-iteration applications may need changes to either the specifications prompt or the generated code to work as intended.

Where can I try this video-to-application feature?

This feature is available for free in Google AI Studio: https://aistudio.google.com/u/1/apps/bundled/video-to-learning-app?showPreview=true